Power of Foresight

Behind Llama3: Meta’s Strategic Play in AI’s Open Access Era

Digital Trust in Peril

The spread of fake news is eroding our faith in the digital systems that we rely on for information. If we cannot trust the news stories that we read online, how can we trust the social media platforms, search engines, and other digital systems that serve as gatekeepers for this information?

Advances in artificial intelligence have revolutionized the way we consume and process information. This revolution, however, carries an insidious threat that could fundamentally erode our trust in digital systems: the misuse of AI technology to generate and disseminate fake news.

Fake news has become an increasingly pervasive problem. With advances in AI, it is now easier than ever to craft and spread news stories that are difficult to distinguish from authentic reporting. Even small changes to how AI systems are directed to interpret and present information can enable malicious actors to produce highly convincing fake news. These fabricated stories can deceive even the most astute readers, sowing confusion and mistrust.

The Profound Impact of Misinformation

The implications of AI misused in this way are profound. The spread of fake news undermines our confidence in the digital platforms we depend on for information. If the authenticity of online news is consistently in question, how can we trust the social media platforms, search engines, and other digital gatekeepers that curate this content? This erosion of trust doesn't stop with news and media—it seeps into all aspects of our digital lives, affecting how we bank, shop, and communicate.

More alarmingly, the degradation of digital trust could compel us to revert to outdated, paper-based sources of information like books and newspapers, negating the progress we've made in digital information management. This shift would not only disrupt how we interact with each other but would also slow down our communication and information-sharing capabilities drastically.

A Glimpse into a Dystopian Future

Imagine a society where distrust in digital information is the norm, forcing people back to slower, less efficient modes of communication. In such a scenario, coordinating responses to natural disasters or other crises could become perilously slow, potentially leading to catastrophic outcomes.

This breakdown in digital trust might also fuel the rise of extremist groups and the proliferation of conspiracy theories. Without reliable sources of information, people might become more susceptible to misinformation, leading to increased social polarization and conflict. The resultant division could further erode the social fabric of our communities.

Moreover, the potential loss of technological progress and innovation is a grave concern. The slowdown in information accessibility could hinder scientific and medical advancements, where timely access to data can be crucial for saving lives.

In an age where information shapes realities, safeguarding the integrity of our digital conversations isn't just prudent; it's imperative. As AI redefines the boundaries of the possible, let us ensure it fortifies trust rather than fractures it.

Join Us in Safeguarding Digital Trust

At the Global AI Transformation Institute, we are committed to addressing these challenges by promoting responsible AI use and educating the public about the risks and safeguards related to AI-generated content. We invite you to join us in this crucial dialogue to protect and restore trust in our digital landscapes.

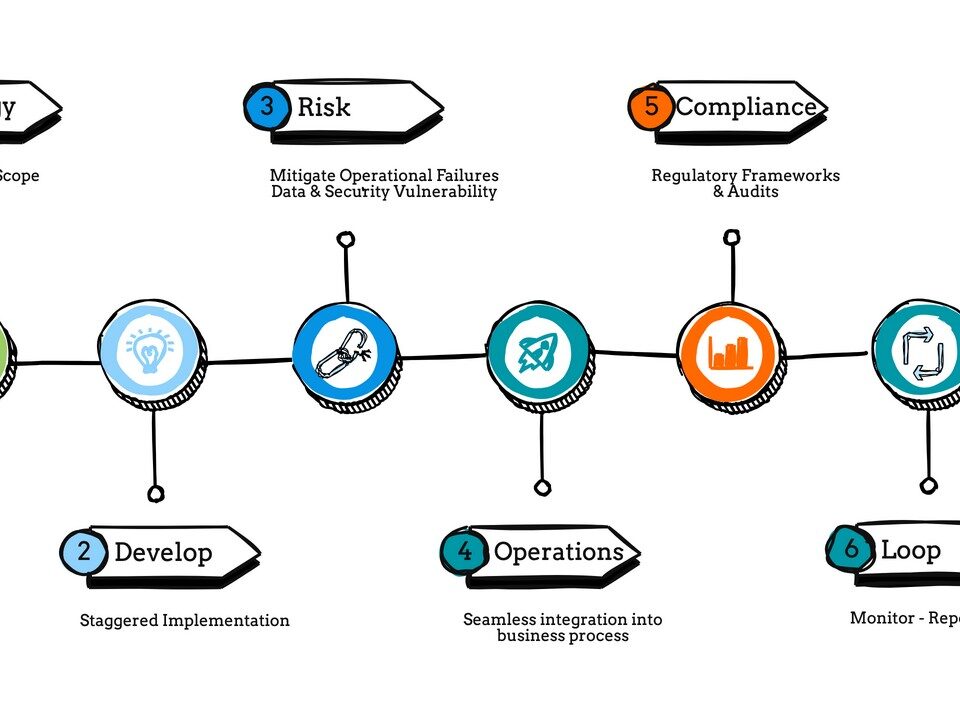

Together, we can advocate for stronger regulatory frameworks, develop robust AI detection tools, and educate users on identifying misinformation. Let's work collectively to ensure that AI remains a force for good, enhancing our digital experiences rather than compromising them.